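Jaguar Land Rover Develops a Self-Learning Smart Car

Author: AbelJiang | 2014-07-16 09:46 | Category: Machine Learning, Industry News | Comments Off

Translated from: TheHindu.com. Jaguar Land Rover (perhaps rendered in Chinese as 捷豹路虎?), the flagship car brand of India's Tata Group, is developing a smart car that can offer a personalized driving experience and help reduce accidents by keeping the driver more attentive.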

A Machine Learning Application: Cutting the Boring Parts Out of Videos

Author: AbelJiang | 2014-07-14 23:07 | Category: Machine Learning | Comments Off

Source: Business Insider. Just about every device has a camera in it, so we’re shooting more video than ever before. If only all of it were worth watching. The latest application of machine learning was developed by Eric P. Xing, professor of machine learning from Carnegie Mellon University, and Bin Zhao, a Ph.D. student in the Machine Learning Department. It’s called LiveLight, and it can help automate the reduction of videos to just their good parts. LiveLight takes a long piece of source footage and “evaluates action in the video, looking for visual novelty and ignoring repetitive or eventless sequences, to create a summary that enables a viewer to get the gist of what happened.” Put another way, it watches your movie and edits out the boring stuff. This all happens with just one pass through said video — LiveLight never works backwards. You’re left with something more like a highlight reel than the too-long original video pictured on the left above. LiveLight is robust enough to run on a standard laptop and is powerful enough to process an hour of video in one or two hours.
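The one-pass, novelty-driven idea described above can be illustrated with a toy sketch. This is not LiveLight's actual algorithm, which the article does not spell out. It assumes each video segment has already been reduced to a small feature vector, and it keeps a segment only when it is far (in Euclidean distance) from every segment kept so far. The function name `summarize_one_pass`, the feature values, and the threshold are all made up for illustration.

```python
import math

def summarize_one_pass(segments, threshold):
    """Return the indices of segments that look novel, scanning the video once.

    segments  -- list of feature vectors, one per video segment (assumed input)
    threshold -- minimum distance to every kept segment for a new one to count as novel
    """
    kept_features = []
    kept_indices = []
    for index, features in enumerate(segments):
        # A segment is novel if nothing kept so far is close to it.
        is_novel = all(math.dist(features, kept) > threshold for kept in kept_features)
        if is_novel:
            kept_features.append(features)
            kept_indices.append(index)
    return kept_indices

# Hypothetical 2-D features: segments 1, 3 and 4 repeat earlier content.
segments = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 4.9), (0.0, 0.1), (9.0, 0.0)]
summary = summarize_one_pass(segments, threshold=1.0)
print(summary)  # [0, 2, 5]
```

Because the loop never revisits earlier segments, the cost grows with the length of the video times the size of the summary, which is in the spirit of the "one pass, never works backwards" description.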

The Trick That Makes Google’s Self-Driving Cars Work

Author: AbelJiang | 2014-06-12 09:53 | Category: Machine Learning, Industry News | Comments Off

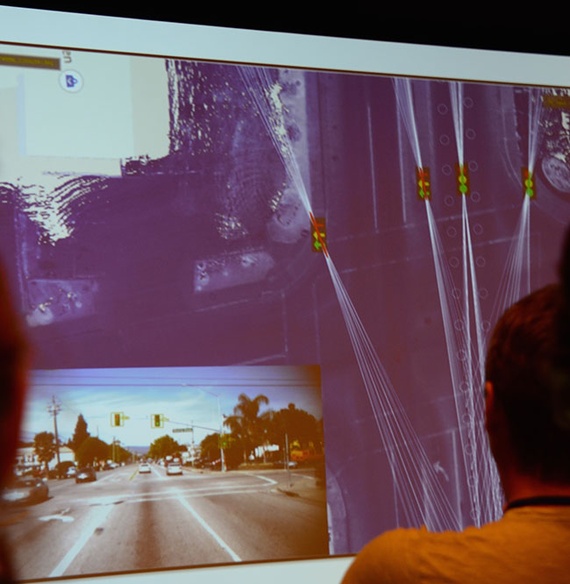

Reposted from http://www.theatlantic.com. Original author: Alexis C. Madrigal

Google’s self-driving cars can tour you around the streets of Mountain View, California. I know this. I rode in one this week. I saw the car’s human operator take his hands from the wheel and the computer assume control. “Autodriving,” said a woman’s voice, and just like that, the car was operating autonomously, changing lanes, obeying traffic lights, monitoring cyclists and pedestrians, making lefts. Even the way the car accelerated out of turns felt right. It works so well that it is, as The New York Times’ John Markoff put it, “boring.”

The implications, however, are breathtaking. Perfect, or near-perfect, robotic drivers could cut traffic accidents, expand the carrying capacity of the nation’s road infrastructure, and free up commuters to stare at their phones, presumably using Google’s many services.

But there’s a catch. Today, you could not take a Google car, set it down in Akron or Orlando or Oakland and expect it to perform as well as it does in Silicon Valley. Here’s why: Google has created a virtual track out of Mountain View.

The key to Google’s success has been that these cars aren’t forced to process an entire scene from scratch. Instead, their teams travel and map each road that the car will travel. And these are not any old maps. They are not even the rich, road-logic-filled maps of consumer-grade Google Maps. They’re probably best thought of as ultra-precise digitizations of the physical world, all the way down to tiny details like the position and height of every single curb. A normal digital map would show a road intersection; these maps would have a precision measured in inches.

But the “map” goes beyond what any of us know as a map. “Really, [our maps] are any geographic information that we can tell the car in advance to make its job easier,” explained Andrew Chatham, the Google self-driving car team’s mapping lead. “We tell it how high the traffic signals are off the ground, the exact position of the curbs, so the car knows where not to drive,” he said. “We’d also include information that you can’t even see like implied speed limits.”

Google has created a virtual world out of the streets their engineers have driven. They pre-load the data for the route into the car’s memory before it sets off, so that as it drives, the software knows what to expect. “Rather than having to figure out what the world looks like and what it means from scratch every time we turn on the software, we tell it what the world is expected to look like when it is empty,” Chatham continued. “And then the job of the software is to figure out how the world is different from that expectation. This makes the problem a lot simpler.”

While it might make the in-car problem simpler, it vastly increases the amount of work required for the task. A whole virtual infrastructure needs to be built on top of the road network! Very few companies, maybe only Google, could imagine digitizing all the surface streets of the United States as a key part of the solution of self-driving cars.
Could any car company imagine that they have that kind of data collection and synthesis as part of their core competency? Whereas, Chris Urmson, a former Carnegie Mellon professor who runs Google’s self-driving car program, oozed confidence when asked about the question of mapping every single street where a Google car might want to operate.

“It’s one of those things that Google, as a company, has some experience with our Google Maps product and Street View,” Urmson said. “We’ve gone around and we’ve collected this data so you can have this wonderful experience of visiting places remotely. And it’s a very similar kind of capability to the one we use here.”

So far, Google has mapped 2,000 miles of road. The US road network has something like 4 million miles of road. “It is work,” Urmson added, shrugging, “but it is not intimidating work.” That’s the scale at which Google is thinking about this project.

All this makes sense within the broader context of Google’s strategy. Google wants to make the physical world legible to robots, just as it had to make the web legible to robots (or spiders, as they were once known) so that they could find what people wanted in the pre-Google Internet of yore. In fact, it might be better to stop calling what Google is doing mapping, and come up with a different verb to suggest the radical break they’ve made with previous ideas of maps. I’d say they’re crawling the world, meaning they’re making it legible and useful to computers.

Self-driving cars sit perfectly in-between Project Tango—a new effort to “give mobile devices a human-scale understanding of space and motion”—and Google’s recent acquisition spree of robotics companies. Tango is about making the “human-scale” world understandable to robots and the robotics companies are about creating the means for taking action in that world.
The more you think about it, the more the goddamn Googleyness of the thing stands out: the ambition, the scale, and the type of solution they’ve come up with to this very hard problem. What was a nearly intractable “machine vision” problem, one that would require close to human-level comprehension of streets, has become a much, much easier machine vision problem thanks to a massive, unprecedented, unthinkable amount of data collection.

Last fall, Anthony Levandowski, another Googler who works on self-driving cars, went to Nissan for a presentation that immediately devolved into a Q&A with the car company’s Silicon Valley team. The Nissan people kept hectoring Levandowski about vehicle-to-vehicle communication, which the company’s engineers (and many in the automotive industry) seemed to see as a significant part of the self-driving car solution. He parried all of their queries with a speed and confidence just short of condescension.

“Can we see more if we can use another vehicle’s sensors to see ahead?” Levandowski rephrased one person’s question. “We want to make sure that what we need to drive is present in everyone’s vehicle and sharing information between them could happen, but it’s not a priority.”

What the car company’s people couldn’t or didn’t want to understand was that Google does believe in vehicle-to-vehicle communication, but serially over time, not simultaneously in real-time. After all, every vehicle’s data is being incorporated into the maps. That information “helps them cheat, effectively,” Levandowski said. With the map data—or as we might call it, experience—all the cars need is their precise position on a super accurate map, and they can save all that parsing and computation (and vehicle to vehicle communication).

There’s a fascinating parallel between what Google’s self-driving cars are doing and what the Andreessen Horowitz-backed startup Anki is doing with its toy car racing game.
When you buy Anki Drive, they sell you a track on which the cars race, which has positioning data embedded. The track is the physical manifestation of a virtual racing map. Last year, Anki CEO (and like Urmson, a Carnegie Mellon robotics guy) Boris Sofman told me knowing the racing environment in advance allows them to more easily sync the state of the virtual world in which their software is running with the physical world in which the cars are driving. “We are able to turn the physical world into a virtual world,” Sofman said. “We can take all these physical characters and abstract away everything physical about them and treat them as if they were virtual characters in a video game on the phone.”

Of course, when there are bicyclists and bad drivers involved, navigating the hybrid virtual-physical world of Mountain View is not easy: the cars still have to "race" around the track, plotting trajectories and avoiding accidents. The Google cars are not dumb machines. They have their own set of sensors: radar, a laser spinning atop the Lexus SUV, and a suite of cameras. And they have some processing on board to figure out what routes to take and avoid collisions. This is a hard problem, but Google is doing the computation with what Levandowski described at Nissan as a "desktop" level system. (The big computation and data processing are done by the teams back at Google's server farms.) What that on-board computer does first is integrate the sensor data. It takes the data from the laser and the cameras and integrates them into a view of the world, which it then uses to orient itself (with the rough guidance of GPS) in virtual Mountain View. "We can align what we're seeing to what's stored on the map. That allows us to very accurately—within a few centimeters—position ourselves on the map," said Dmitri Dolgov, the self-driving car team's software lead. "Once we know where we are, all that wonderful information encoded in our maps about the geometry and semantics of the roads becomes available to the car."
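To make the "align what we're seeing to what's stored on the map" step more concrete, here is a deliberately tiny sketch. It is not Google's method, which the article does not describe in detail. It assumes a prior map of a few landmark positions, a scan of the same landmarks in the car's own frame with no rotation, and a rough GPS guess, and it brute-force searches nearby positions for the one that lines the scan up best. Once the pose is known, anything in a scan that the map does not explain is flagged as unexpected, in the spirit of Chatham's "how the world is different from that expectation". All names and numbers (`PRIOR_MAP`, `localize`, `find_unexpected`, the coordinates) are hypothetical.

```python
import math

# Hypothetical prior map: landmark positions (x, y) in metres, surveyed in advance.
PRIOR_MAP = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]

def nearest_distance(point, landmarks):
    """Distance from a point to the closest mapped landmark."""
    return min(math.dist(point, landmark) for landmark in landmarks)

def alignment_error(observed, pose, landmarks):
    """Sum of squared distances after shifting car-frame points by a candidate pose."""
    px, py = pose
    return sum(nearest_distance((x + px, y + py), landmarks) ** 2 for x, y in observed)

def localize(observed, gps_guess, landmarks, search_radius=2.0, step=0.1):
    """Grid-search around a rough GPS guess for the best-aligning position."""
    steps = int(round(search_radius / step))
    best_pose, best_error = gps_guess, float("inf")
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            candidate = (gps_guess[0] + i * step, gps_guess[1] + j * step)
            error = alignment_error(observed, candidate, landmarks)
            if error < best_error:
                best_pose, best_error = candidate, error
    return best_pose

def find_unexpected(scan, pose, landmarks, tolerance=0.5):
    """Return scan points (in map coordinates) that no mapped landmark explains."""
    px, py = pose
    world_points = [(x + px, y + py) for x, y in scan]
    return [p for p in world_points if nearest_distance(p, landmarks) > tolerance]

# The car is really at (3, 2); the static landmarks as seen from the car:
static_scan = [(-3.0, -2.0), (7.0, -2.0), (7.0, 3.0), (-3.0, 3.0)]
pose = localize(static_scan, gps_guess=(4.0, 1.0), landmarks=PRIOR_MAP)

# A later scan also contains something the map does not know about, e.g. a cyclist.
full_scan = static_scan + [(2.0, 0.0)]
unexpected = find_unexpected(full_scan, pose, PRIOR_MAP)
```

A real system would also estimate heading and would use far more robust matching; the grid search here only serves to show why a good prior map plus a rough GPS fix turns localization into a small search problem.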

Once they know where they are in space, the cars can do the work of watching for and modeling the behavior of dynamic objects like other cars, bicycles, and pedestrians. Here, we see another Google approach. Dolgov's team uses machine learning algorithms to create models of other people on the road. Every single mile of driving is logged, and that data fed into computers that classify how different types of objects act in all these different situations. While some driver behavior could be hardcoded in ("When the lights turn green, cars go"), they don't exclusively program that logic, but learn it from actual driver behavior. In the way that we know that a car pulling up behind a stopped garbage truck is probably going to change lanes to get around it, having been built with 700,000 miles of driving data has helped the Google algorithm to understand that the car is likely to do such a thing. Most driving situations are not hard to comprehend, but what about the tough ones or the unexpected ones? In Google's current process, a human driver would take control, and (so far) safely guide the car. But fascinatingly, in the circumstances when a human driver has to take over, what the Google car would have done is also recorded, so that engineers can test what would have happened in extreme circumstances without endangering the public. So, each Google car is carrying around both the literal products of previous drives—the imagery and data captured from crawling the physical world—as well as the computed outputs of those drives, which are the models for how other drivers might behave.
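The idea of learning driver behavior from logged miles rather than hardcoding it can be sketched with the simplest possible model: count what other road users did in each situation and turn the counts into smoothed probabilities. This is only an illustration under stated assumptions, not the model Dolgov's team uses. The situation labels, action names, and log contents below are invented.

```python
from collections import Counter, defaultdict

ACTIONS = ("change_lane", "wait", "go")  # hypothetical action vocabulary

def fit_behavior_model(logged_events):
    """Count how often each action followed each situation in the driving logs."""
    counts = defaultdict(Counter)
    for situation, action in logged_events:
        counts[situation][action] += 1
    return counts

def predict_action(model, situation, actions=ACTIONS, smoothing=1.0):
    """Return smoothed probabilities of each action in the given situation."""
    seen = model.get(situation, Counter())
    total = sum(seen.values()) + smoothing * len(actions)
    return {action: (seen[action] + smoothing) / total for action in actions}

# Invented log: (situation, what the other driver did next)
log = (
    [("behind_stopped_truck", "change_lane")] * 8
    + [("behind_stopped_truck", "wait")] * 2
    + [("light_turns_green", "go")] * 10
)
model = fit_behavior_model(log)
probabilities = predict_action(model, "behind_stopped_truck")
most_likely = max(probabilities, key=probabilities.get)
print(most_likely)  # change_lane
```

The smoothing term keeps rare or never-seen situations from producing zero probabilities, which matters for exactly the "tough or unexpected" cases the article goes on to discuss.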

There is, at least in an analogical sense, a connection between how the Google cars work and how our own brains do. We think about the way we see as accepting sensory input and acting accordingly. Really, our brains are making predictions all the time, which guide our perception. The actual sensory input—the light falling on retinal cells—is secondary to the prior experience that we've built into our brains through years of experience being in the world. That Google's self-driving cars are using these principles is not surprising. That they are having so much success doing so is. Peter Norvig, the head of AI at Google, and two of his colleagues coined the phrase "the unreasonable effectiveness of data" in an essay to describe the effect of huge amounts of data on very difficult artificial intelligence problems. And that is exactly what we're seeing here. A kind of Googley mantra concludes the Norvig essay: "Now go out and gather some data, and see what it can do." Even if it means continuously and neverendingly driving 4 million miles of roads with the most sophisticated cars on Earth and then hand-massaging that data—they'll do it. That's the unreasonable effectiveness of Google.

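Some Thoughts on the News That Google Uses AI to Improve Its Data Centers

Author: AbelJiang | 2014-06-04 10:34 | Category: Machine Learning, Industry News | Comments Off

Although the news item that Chief (首席) posted already links to Jim Gao's white paper, this editor still wants to make a few points about this machine learning application. If you read the white paper, you will see that most of its references come from the machine learning course that Professor Andrew Ng teaches on Coursera. I looked at his profile on LinkedIn: Jim Gao studied mechanical and industrial design, as well as environmental science, at UCB, so most of the machine learning knowledge used in the paper comes from that Coursera course.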

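Andrew Ng Joins Baidu

Author: AbelJiang | 2014-05-26 21:19 | Category: Machine Learning | 2 comments »

On May 18, at the launch ceremony for the new site of Baidu's US R&D center in Sunnyvale, California, Stanford professor Andrew Ng was appointed Baidu's Chief Scientist, with overall responsibility for Baidu Research. Part of the content below consists of questions and answers about Andrew collected from Quora: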

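DeepMind Research: Teaching Computers to Play Simple Games with Deep Reinforcement Learning

Author: AbelJiang | 2014-05-19 19:45 | Category: Deep Learning, Machine Learning | Comments Off

Last year, Google bought a machine learning startup called DNNresearch. The small company was co-founded by Professor Geoffrey Hinton of the University of Toronto and two of his graduate students. Professor Hinton is something of an idol in the neural network field; here is the neural network course he taught on Coursera. This year, Google spent nearly 500 million US dollars to buy DeepMind, another startup doing machine learning research. Facebook, Yahoo, and other companies have also paid hefty prices for machine learning startups; it is a real scramble for talent. Below is one of DeepMind's better-known results from before the acquisition: teaching a computer to play simple games. Click the image for details.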
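For readers who have not met reinforcement learning before, here is a minimal sketch of the underlying idea. It is not DeepMind's system: their work pairs this kind of value learning with a deep neural network that reads screen pixels, whereas the sketch below uses a plain lookup table on a made-up five-cell corridor "game" in which reaching the rightmost cell wins. The environment, the hyperparameters, and all names are assumptions for illustration.

```python
import random

N_STATES = 5        # cells 0..4 in a corridor; reaching cell 4 wins the game
ACTIONS = (-1, 1)   # index 0 moves left, index 1 moves right

def step(state, action):
    """Apply a move, returning (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore at random sometimes, and whenever the two values are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                choice = rng.randrange(2)
            else:
                choice = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[choice])
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][choice] += alpha * (target - q[state][choice])
            state = next_state
    return q

q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The agent is never told that "right" is the way to win; it only sees the reward at the end, and the update rule propagates that reward backwards through the table over repeated episodes.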

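New Google Patent: Using Machine Learning to Optimize the Order of Phone Notifications

Author: AbelJiang | 2014-05-16 18:44 | Category: Machine Learning, Industry News | Comments Off

Phones constantly receive alerts from different apps: text messages, email, missed calls, alarms, location reminders, and all kinds of notifications from third-party apps. Most phones alert the user whenever a new notification arrives, but a nonstop stream of alerts mixed with ads is annoying, so some recent systems let users set notification priorities in order to change the order in which notifications are presented. Most of these systems, however, lack any ability to rank notifications intelligently, and that is the problem this new Google patent aims to solve: giving users an intelligent system that, with no manual setup, uses a learning algorithm to discover which notifications matter to the user and sets priorities automatically. Click the image for details.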

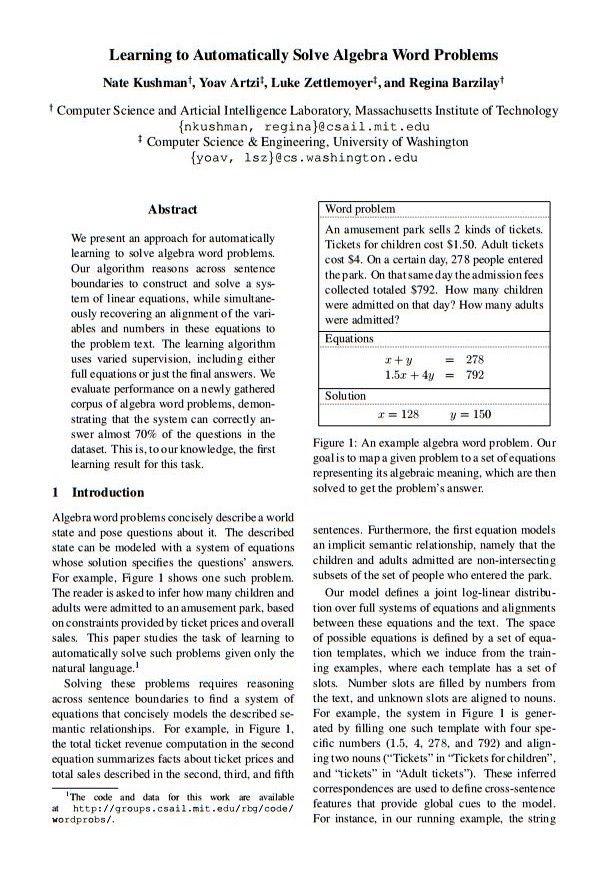
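The post does not say which learning algorithm the patent uses, so the following is only a guess at the simplest thing that fits the description: watch whether the user opens or dismisses notifications from each source, estimate an open rate per source, and sort incoming notifications by that estimate. The class name `NotificationRanker`, the prior values, and the sample data are all hypothetical.

```python
from collections import defaultdict

class NotificationRanker:
    """Ranks notifications by a smoothed estimate of how often the user opens each source."""

    def __init__(self, prior_opens=1.0, prior_shown=2.0):
        # The priors give an unseen source a neutral score of 0.5 (an assumption).
        self.prior_opens = prior_opens
        self.prior_shown = prior_shown
        self.opens = defaultdict(int)
        self.shown = defaultdict(int)

    def record(self, source, opened):
        """Log one notification from `source` and whether the user opened it."""
        self.shown[source] += 1
        if opened:
            self.opens[source] += 1

    def score(self, source):
        """Smoothed open rate for a source, between 0 and 1."""
        return (self.opens[source] + self.prior_opens) / (self.shown[source] + self.prior_shown)

    def rank(self, notifications):
        """Sort (source, text) pairs so the most likely to matter come first."""
        return sorted(notifications, key=lambda item: self.score(item[0]), reverse=True)

ranker = NotificationRanker()
for i in range(10):
    ranker.record("sms", opened=(i < 9))    # the user opens 9 of 10 text messages
    ranker.record("promo", opened=(i < 1))  # but only 1 of 10 promotions

incoming = [("promo", "50% off today"), ("sms", "Running late"), ("new_app", "Welcome")]
ranked = ranker.rank(incoming)
print([source for source, _ in ranked])  # ['sms', 'new_app', 'promo']
```

No manual priority setting is involved: the ordering comes entirely from observed behavior, which is the property the post highlights.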

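New MIT Research: A Computer System That Automatically Solves Algebra Word Problems

Author: AbelJiang | 2014-05-10 09:17 | Category: Machine Learning, Industry News | Comments Off

Recently, researchers at MIT's Computer Science and Artificial Intelligence Laboratory, working with colleagues at the University of Washington, developed a new computer system that automatically solves the kind of word problems, stated in natural language, that are common in algebra classes.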

From Bandits to Monte-Carlo Tree Search: The Optimistic Principle Applied to Optimization and Planning

Author: AbelJiang | 2014-04-28 10:52 | Category: Machine Learning, Industry News | Comments Off

Visual-Textual Joint Relevance Learning for Tag-Based Social Image Search

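Author: AbelJiang | 2014-04-28 10:47 | Category: Machine Learning | Comments Off

With the popularity of social media networks, researchers have devoted much effort to tag-based social image search. Most existing methods, however, fail to use visual and textual information at the same time. This paper presents a method that uses both visual and textual information to judge the relevance of an image. The relevance estimation is carried out by a hypergraph learning approach. See the paper for details.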
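As a rough illustration of what "hypergraph learning" for relevance can look like, the sketch below uses a commonly cited normalized propagation scheme on a hypergraph: images are vertices, and each tag or visual word is a hyperedge connecting all images that share it. It is not the paper's exact method (the summary above does not give the formulation, and the paper may, for instance, treat hyperedge weights differently). The incidence matrix, the unit weights, and the choice of `alpha` are assumptions.

```python
import math

def hypergraph_relevance(incidence, weights, initial, alpha=0.5, iterations=100):
    """Propagate initial relevance labels over a hypergraph.

    incidence -- incidence[v][e] is 1 if image v belongs to hyperedge e, else 0
    weights   -- one weight per hyperedge (fixed here; an assumption)
    initial   -- initial relevance per image, e.g. 1.0 for a known relevant image
    Assumes every image is in at least one hyperedge and no hyperedge is empty.
    """
    n_vertices, n_edges = len(incidence), len(weights)
    vertex_degree = [sum(weights[e] * incidence[v][e] for e in range(n_edges))
                     for v in range(n_vertices)]
    edge_degree = [sum(incidence[v][e] for v in range(n_vertices))
                   for e in range(n_edges)]
    # Normalized similarity: images are close if they share (small) hyperedges.
    theta = [[sum(incidence[i][e] * weights[e] * incidence[j][e] / edge_degree[e]
                  for e in range(n_edges))
              / math.sqrt(vertex_degree[i] * vertex_degree[j])
              for j in range(n_vertices)]
             for i in range(n_vertices)]
    scores = list(initial)
    for _ in range(iterations):
        scores = [alpha * sum(theta[i][j] * scores[j] for j in range(n_vertices))
                  + (1 - alpha) * initial[i]
                  for i in range(n_vertices)]
    return scores

# Four images; hyperedges: tag "cat" {0, 1}, a shared visual word {0, 1, 2}, tag "car" {2, 3}.
incidence = [
    [1, 1, 0],
    [1, 1, 0],
    [0, 1, 1],
    [0, 0, 1],
]
scores = hypergraph_relevance(incidence, weights=[1.0, 1.0, 1.0], initial=[1.0, 0.0, 0.0, 0.0])
ranking = sorted(range(len(scores)), key=lambda v: scores[v], reverse=True)
print(ranking)  # [0, 1, 2, 3]
```

Image 1 shares both a tag and a visual word with the labeled image 0, so it ends up ranked above image 2, which shares only the visual word, and image 3, which is connected only indirectly. That is the sense in which textual and visual evidence are used jointly.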