Google: Excellent Papers for 2011

Author: Chen Huailin (陈怀临) | 2012-10-31 16:12 | Category: Popular Science


Posted by Corinna Cortes and Alfred Spector, Google Research

Googlers across the company actively engage with the scientific community by publishing technical papers, contributing open-source packages, working on standards, introducing new APIs and tools, giving talks and presentations, participating in ongoing technical debates, and much more. Our publications offer technical and algorithmic advances, feature aspects we learn as we develop novel products and services, and shed light on some of the technical challenges we face at Google.

In an effort to highlight some of our work, we periodically select a number of publications to be featured on this blog. We first posted a set of papers on this blog in mid-2010 and subsequently discussed them in more detail in the following blog postings. In a second round, we highlighted new noteworthy papers from the latter half of 2010. This time we honor the influential papers authored or co-authored by Googlers covering all of 2011, which amount to roughly 10% of our total publications. Choosing is tough, so we may have left out some important papers; please see the publications list to review the complete group.

In the coming weeks we will be offering a more in-depth look at these publications, but here are some summaries:

Audio Processing

“Cascades of two-pole–two-zero asymmetric resonators are good models of peripheral auditory function”, Richard F. Lyon, Journal of the Acoustical Society of America, vol. 130 (2011), pp. 3893-3904.

Electronic Commerce and Algorithms

“Online Vertex-Weighted Bipartite Matching and Single-bid Budgeted Allocations”, Gagan Aggarwal, Gagan Goel, Chinmay Karande, Aranyak Mehta, SODA 2011.

“Milgram-routing in social networks”, Silvio Lattanzi, Alessandro Panconesi, D. Sivakumar, Proceedings of the 20th International Conference on World Wide Web, WWW 2011, pp. 725-734.

“Non-Price Equilibria in Markets of Discrete Goods”, Avinatan Hassidim, Haim Kaplan, Yishay Mansour, Noam Nisan, EC, 2011.

HCI

“From Basecamp to Summit: Scaling Field Research Across 9 Locations”, Jens Riegelsberger, Audrey Yang, Konstantin Samoylov, Elizabeth Nunge, Molly Stevens, Patrick Larvie, CHI 2011 Extended Abstracts.

“User-Defined Motion Gestures for Mobile Interaction”, Jaime Ruiz, Yang Li, Edward Lank, CHI 2011: ACM Conference on Human Factors in Computing Systems, pp. 197-206.

Information Retrieval

“Reputation Systems for Open Collaboration”, B.T. Adler, L. de Alfaro, A. Kulshrestra, I. Pye, Communications of the ACM, vol. 54 No. 8 (2011), pp. 81-87.

Machine Learning and Data Mining

“Domain adaptation in regression”, Corinna Cortes, Mehryar Mohri, Proceedings of The 22nd International Conference on Algorithmic Learning Theory, ALT 2011.

“On the necessity of irrelevant variables”, David P. Helmbold, Philip M. Long, ICML, 2011.

“Online Learning in the Manifold of Low-Rank Matrices”, Gal Chechik, Daphna Weinshall, Uri Shalit, Neural Information Processing Systems (NIPS 23), 2011, pp. 2128-2136.

Machine Translation

“Training a Parser for Machine Translation Reordering”, Jason Katz-Brown, Slav Petrov, Ryan McDonald, Franz Och, David Talbot, Hiroshi Ichikawa, Masakazu Seno, Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing (EMNLP ’11).

“Watermarking the Outputs of Structured Prediction with an application in Statistical Machine Translation”, Ashish Venugopal, Jakob Uszkoreit, David Talbot, Franz Och, Juri Ganitkevitch, Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing (EMNLP).

“Inducing Sentence Structure from Parallel Corpora for Reordering”, John DeNero, Jakob Uszkoreit, Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing (EMNLP).

Multimedia and Computer Vision

“Kernelized Structural SVM Learning for Supervised Object Segmentation”, Luca Bertelli, Tianli Yu, Diem Vu, Burak Gokturk, Proceedings of IEEE Conference on Computer Vision and Pattern Recognition 2011.

“Auto-Directed Video Stabilization with Robust L1 Optimal Camera Paths”, Matthias Grundmann, Vivek Kwatra, Irfan Essa, IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2011).

“The Power of Comparative Reasoning”, Jay Yagnik, Dennis Strelow, David Ross, Ruei-Sung Lin, International Conference on Computer Vision (2011).

NLP

“Unsupervised Part-of-Speech Tagging with Bilingual Graph-Based Projections”, Dipanjan Das, Slav Petrov, Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics (ACL ’11), 2011, Best Paper Award.

Networks

“TCP Fast Open”, Sivasankar Radhakrishnan, Yuchung Cheng, Jerry Chu, Arvind Jain, Barath Raghavan, Proceedings of the 7th International Conference on emerging Networking EXperiments and Technologies (CoNEXT), 2011.

“Proportional Rate Reduction for TCP”, Nandita Dukkipati, Matt Mathis, Yuchung Cheng, Monia Ghobadi, Proceedings of the 11th ACM SIGCOMM Conference on Internet Measurement 2011, Berlin, Germany, November 2-4, 2011.

Security and Privacy

“Automated Analysis of Security-Critical JavaScript APIs”, Ankur Taly, Úlfar Erlingsson, John C. Mitchell, Mark S. Miller, Jasvir Nagra, IEEE Symposium on Security & Privacy (SP), 2011.

“App Isolation: Get the Security of Multiple Browsers with Just One”, Eric Y. Chen, Jason Bau, Charles Reis, Adam Barth, Collin Jackson, 18th ACM Conference on Computer and Communications Security, 2011.

Speech

“Improving the speed of neural networks on CPUs”, Vincent Vanhoucke, Andrew Senior, Mark Z. Mao, Deep Learning and Unsupervised Feature Learning Workshop, NIPS 2011.

“Bayesian Language Model Interpolation for Mobile Speech Input”, Cyril Allauzen, Michael Riley, Interspeech 2011.

Statistics

“Large-Scale Parallel Statistical Forecasting Computations in R”, Murray Stokely, Farzan Rohani, Eric Tassone, JSM Proceedings, Section on Physical and Engineering Sciences, 2011.

Structured Data

“Dremel: Interactive Analysis of Web-Scale Datasets”, Sergey Melnik, Andrey Gubarev, Jing Jing Long, Geoffrey Romer, Shiva Shivakumar, Matt Tolton, Communications of the ACM, vol. 54 (2011), pp. 114-123.

“Representative Skylines using Threshold-based Preference Distributions”, Atish Das Sarma, Ashwin Lall, Danupon Nanongkai, Richard J. Lipton, Jim Xu, International Conference on Data Engineering (ICDE), 2011.

“Hyper-local, directions-based ranking of places”, Petros Venetis, Hector Gonzalez, Alon Y. Halevy, Christian S. Jensen, PVLDB, vol. 4(5) (2011), pp. 290-30.

Systems

“Power Management of Online Data-Intensive Services”, David Meisner, Christopher M. Sadler, Luiz André Barroso, Wolf-Dietrich Weber, Thomas F. Wenisch, Proceedings of the 38th ACM International Symposium on Computer Architecture, 2011.

“The Impact of Memory Subsystem Resource Sharing on Datacenter Applications”, Lingjia Tang, Jason Mars, Neil Vachharajani, Robert Hundt, Mary-Lou Soffa, ISCA, 2011.

“Language-Independent Sandboxing of Just-In-Time Compilation and Self-Modifying Code”, Jason Ansel, Petr Marchenko, Úlfar Erlingsson, Elijah Taylor, Brad Chen, Derek Schuff, David Sehr, Cliff L. Biffle, Bennet S. Yee, ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), 2011.

“Thialfi: A Client Notification Service for Internet-Scale Applications”, Atul Adya, Gregory Cooper, Daniel Myers, Michael Piatek, Proc. 23rd ACM Symposium on Operating Systems Principles (SOSP), 2011, pp. 129-142.

MediaTek Roars Back: Its Smartphone Chips Are Selling Like Crazy

Author: hid | 2012-10-31 16:09 | Category: Industry News


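After missing the early opportunities of the smartphone market, MediaTek, once the king of shanzhai (knockoff) phones, has made a full comeback: shipments of its smartphone solutions keep surging, and revenue and profit are climbing steadily.

MediaTek president Hsieh Ching-chiang (谢清江) said at yesterday's investor conference that the company shipped 35 to 40 million smartphone chips in the third quarter and expects to ship more than 40 million in the fourth quarter. Analysts are even more optimistic, expecting fourth-quarter shipments to approach 50 million.

Hsieh said that the rapid growth of China's smartphone market and volume shipments of the company's dual-core processors were the key factors behind this result. He also disclosed that third-quarter shipments actually exceeded the company's earlier expectations, with the single-core MT6575 and the dual-core MT6577 accounting for the largest shares; the latter in particular has been adopted by many handset makers in mainland China. Hsieh said MediaTek will begin shipping its first quad-core chip, the MT6589, built on a 28 nm process, in the first quarter of 2013.

MediaTek's third-quarter revenue was NT$29.47 billion (RMB 6.295 billion), up 26.1% year over year and 25.7% quarter over quarter, with the mainland Chinese smartphone market contributing the most. Net profit was NT$4.94 billion (RMB 1.055 billion), up 47.2% quarter over quarter; earnings per share were NT$4.06 (RMB 0.87), up 37.6% quarter over quarter; gross margin was 41.2%, up 0.4 percentage points quarter over quarter. MediaTek expects fourth-quarter revenue of NT$28.9 to 30.9 billion (RMB 6.17 to 6.60 billion), with a gross margin of 41% to 43%.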

HelloGCC Workshop 2012

Author: teawater | 2012-10-29 16:11 | Category: Industry News


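http://linux.chinaunix.net/hellogcc2012/

On November 10, 2012, we will hold HelloGCC 2012.

[About the Event] If you have any questions, send an email to hellogcc@freelists.org, or post in the CPU and Compiler board of the ChinaUnix forum to discuss the event.

[Schedule] Saturday afternoon, November 10, 2012

[Talks]
1. LLVM: Another Toolchain Platform. We chose LLVM as the foundation for our compilation tools. This talk covers a basic software development platform: we will introduce the LLVM system architecture, how to implement an LLVM backend, and how to integrate an assembler/disassembler through LLVM's MC layer, and finally briefly discuss linkers and symbolic debuggers.
2. Space optimization in GCC
3. A brief introduction to participating in community development
4. A faster Android emulator
5. KGTP, GDB and Linux
6. MIPS SDK demo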

2012 China Wireless Network Summit: AeroHive Special Report

Author: Chen Huailin (陈怀临) | 2012-10-28 21:39 | Category: Industry News

“The Age of Picture Reading: Dutulo” Beta Launched, Going All Out to Build a Picture-Viewing Carnival!

Author: Chen Huailin (陈怀临) | 2012-10-28 16:07 | Category: Industry News


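Folks, thanks for your patience! (Applause...) If the real secret behind the runaway popularity of Momo and WeChat is OND, that is, one-night (or N-night) stands, then what should we homebody engineers turn to in our downtime? In this age of sensual indulgence, how do we keep our dignity while still dealing with our hormones? We reject those boring ONDs! Say NO! The answer is: The Age of Picture Reading! “The Age of Picture Reading: Dutulo” is officially launched, throwing a picture-viewing carnival for everyone! www DOT dutulo DOT com (Dutulo) brings you a beautiful visual experience, dazzling to behold! “Daily Picks” serves up pictures recommended by users every day. When you are tired during the day, on your lunch break, or surfing the web at night, open your browser on your iPad, tablet, or Android phone and enjoy a longer life [Note: scientific data show that viewing one beautiful picture (beautiful women included) adds 5 minutes to your life, while OND definitely shortens it]. Using machine learning and data mining, we currently offer our engineer friends the following sections: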

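Last but apparently not least, for our friends in North America we have built full interoperability between Sina Weibo and the foreigners' Facebook. With one click you can forward a Sina Weibo post you like to your Facebook wall, and you can also read your Facebook feeds in full on Dutulo and forward them to Sina Weibo with one click!!! A first: duplex messaging between Weibo and Facebook, opening a new chapter in Sino-American cultural exchange!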

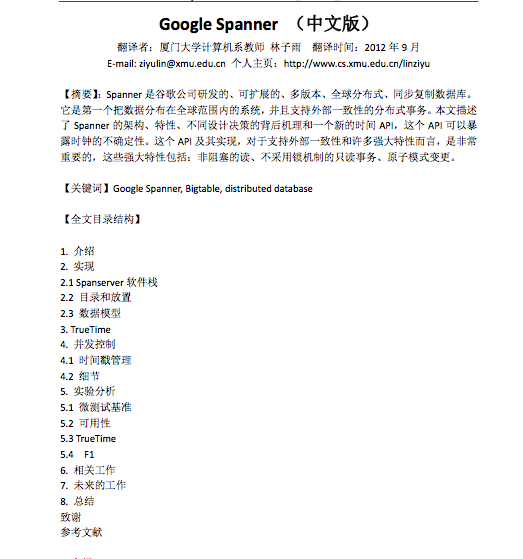

Google Spanner: A Globally Distributed Database

Author: Chen Huailin (陈怀临) | 2012-10-28 13:26 | Category: Popular Science

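“Investigative Report on the U.S. National Security Issues Posed by Chinese Telecommunications Companies Huawei and ZTE”: The Investigation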

Author: Chen Huailin (陈怀临) | 2012-10-27 21:20 | Category: Industry News

Series: U.S. House of Representatives Investigative Report on Huawei and ZTE

II. The Investigation

A. Scope of the Investigation

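The House Permanent Select Committee on Intelligence is responsible for overseeing the intelligence activities of the United States and ensuring that they are lawful, effective, and appropriately resourced to protect U.S. national security interests. Specifically, the Committee is responsible for continuous oversight and study of the intelligence community's components, programs, and activities, and for rigorously examining the intelligence community's sources and methods. Along with this responsibility comes the need to learn about and understand the threats the United States faces from abroad, including those aimed directly at our nation's critical infrastructure. Likewise, the Committee must assess the threat from foreign intelligence activities and ensure that U.S. counterintelligence agencies are focused and adequately resourced to defeat those intrusions.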

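The Committee's goal for this investigation was to examine the potential security risks that China's two leading telecommunications companies pose to the United States, and to review whether our government is properly positioned to understand and respond to this threat. A further goal was to determine what information should be provided, in an open setting, to answer whether these two companies pose a national security risk in the U.S. telecommunications market, a risk that could lead to loss of control over U.S. critical infrastructure.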

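The U.S. telecommunications industry is clearly becoming ever more dependent on a global supply chain for equipment and services. This dependence carries obvious risks: individuals or groups, including those backed by foreign governments, can and will exploit and undermine the reliability of the network. If we are to protect the security and proper functioning of our networks, protect national security, and guard against economic threats directed at these telecommunications networks, it is essential that we better understand the risks facing the supply chain. To that end, the scope of this investigation covered the basic need to manage the overall supply chain using risk-mitigation methods.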

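Recent studies have highlighted that more cyberattacks originate from China than from any other country. In a public report on cyber espionage, senior U.S. national counterintelligence officials explained that “Chinese actors are the world's most active and persistent perpetrators of economic espionage.” This is why the Committee focused on Chinese companies that are most likely to have internal ties to the Chinese government (for example, Huawei and ZTE) and that are seeking to expand in the U.S. market. Huawei and ZTE are both Chinese domestic companies with historical ties to the Chinese government. Both have established U.S. subsidiaries and are seeking to increase their share of the U.S. market. At present, Huawei has drawn the most attention from analysts and the media. Because the two companies are similar in nature, with potential ties to the Chinese government, support from the Chinese government, and a push to expand in the U.S. market, the Committee decided to investigate Huawei and ZTE together.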

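Huawei and ZTE both insisted that the Committee should not focus only on their two companies while ignoring other companies that manufacture equipment and components in China. The Committee does recognize that many non-Chinese companies, including U.S. technology companies, manufacture their products in China. It should be noted, however, that where a product is manufactured is important to a risk assessment but is not the only factor; a company's ownership, history, and the products it sells also matter a great deal. Huawei and ZTE are indeed not the only companies that could pose a national security risk to the United States, but they are the two largest Chinese-founded, Chinese-owned telecommunications equipment companies, and both are actively seeking sales in the U.S. network equipment market. Therefore, to assess risks to the (telecommunications equipment) supply chain, the Committee decided to focus first on the vulnerabilities it could see, in the hope that the results of this investigation will inform future reviews of the risks that companies from China or other countries pose to the U.S. telecommunications equipment supply chain.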

B. Investigation Process

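The Committee's investigation included extensive interviews with the companies concerned and with government officials, review of numerous relevant documents, and an open hearing with senior officials from Huawei and ZTE. Committee staff reviewed company materials, met with Huawei and ZTE officials, and conducted lengthy, in-depth interviews. Committee staff also toured the companies' facilities and factories.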

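Specifically, on February 23, 2012, Committee staff met with and interviewed Huawei executives at the company's headquarters in Shenzhen. The delegation toured Huawei's headquarters, reviewed the company's product lines, and visited one of Huawei's large equipment manufacturing plants. Huawei officials taking part in the discussions included Hu Houkun (胡厚崑), Deputy Chairman; Bai Yi (白熠), Vice President of the Finance Management Office; Chen Wei (陈巍), Senior Vice President in charge of the United States; Jiang Xisheng (江西生), Secretary of the Board; John Suffolk, Global Cyber Security Officer; and Hao Yi (郝艺) of the Export Control Department, among others.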

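On April 12, Committee staff visited ZTE executives at the company's headquarters in Shenzhen. In addition to the meetings, the delegation briefly toured ZTE's headquarters building and its equipment manufacturing plant. ZTE executives taking part in the talks included Zhu Jinyun (朱进云, Senior Vice President for the U.S. and North American markets), Fan Qingfeng (范庆丰, Executive Vice President and Vice President of Global Marketing and Sales), Guo Jiajun (郭家俊, Chief Legal Officer), independent director Timothy Steinert (石义德), Ma Xueying (马学英, Chief Legal Officer), Cao Wei (曹伟, Security and Investment Department, Information Release Office), Qian Yu (钱宇, Security and Investment Department, Information Release Office), and John Merrigan (attorney, DLA Piper), among others.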

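In May 2012, Committee Ranking Member Ruppersberger and Committee members Representatives Nunes, Bachmann, and Schiff traveled to Hong Kong to meet senior Huawei and ZTE officials. During this trip, the members met with Huawei's founder, Ren Zhengfei (任正非).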

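Following these meetings, the Committee sent letters to Huawei and ZTE asking the companies to answer a number of questions in writing and to produce documents, in order to fill gaps in the Committee's information about the two companies, resolve inconsistent or incomplete answers, and obtain written evidence confirming the companies' history and current status. Unfortunately, neither company fully or adequately responded to the Committee's document requests. In fact, neither Huawei nor ZTE provided the internal documents requested in the Committee's letters. To answer the remaining questions, the Committee asked each company to appear at an open hearing before the House.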

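On September 13, 2012, the Committee held an open hearing attended by representatives of both Huawei and ZTE: Charles Ding (丁耘), Huawei Senior Vice President and the company's U.S. representative, and Zhu Jinyun (朱进云), ZTE's Senior Vice President for North America and Europe. The hearing was fair and transparent. Each witness was given 20 minutes for an opening statement, and throughout the questioning each witness had the help of an interpreter to ensure that they could fully understand and truthfully answer the questions.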

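We again found that the witnesses (from Huawei and ZTE) often gave vague and incomplete answers. For example, they claimed not to understand or know some basic terms, could not answer questions about the composition of the Chinese Communist Party committees inside their companies, declined to answer directly about their companies' operations in the United States, tried to avoid questions about their past record on intellectual property protection, and claimed not to understand or be aware of the Chinese laws under which the government could compel them to give government agencies access to and allow inspection of their (telecommunications) equipment. The companies' post-hearing answers to the Committee's questions for the record were equally evasive.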

C. Challenges Facing the Investigation

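This unclassified report contains the unclassified information the Committee obtained while trying to understand the nature of these companies, the role of the Chinese government and the Chinese Communist Party within them, and their current business operations in the United States. In the process, the Committee faced many challenges, some of which are the same ones faced by anyone trying to understand the relationship between the Chinese government and Chinese companies and the threat China poses to our infrastructure. These challenges include: a lack of transparency in China's corporate and bureaucratic structures, which breeds a lack of trust; a general reluctance in the private sector to share proprietary or confidential information; fear among private-sector companies and individuals of government retaliation if they speak out about their concerns; and the uncertain attribution of cyberattacks.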

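The classified annex provides further information that heightened the Committee's concerns about national security threats; to avoid endangering U.S. national security, this classified information will not be made public. Even so, the unclassified portion of the report makes clear that Huawei and ZTE have failed to alleviate the Committee's concerns about the significant security issues raised by their continued expansion in the U.S. market. Because the companies repeatedly failed to answer key questions fully and clearly and did not provide credible internal evidence to support their answers, none of the Committee's national security concerns about Huawei's and ZTE's U.S. operations have been alleviated. In fact, given their obstructive behavior, the Committee believes that addressing the U.S. national security issues posed by Huawei and ZTE is an urgent priority.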

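In addition to its discussions with the companies, the Committee's investigators interviewed industry experts and former and current employees of both companies. Companies across the United States have experienced strange or alarming incidents while using Huawei or ZTE equipment. Officials at these companies (which use Huawei and ZTE equipment) are consistently concerned that publicly acknowledging such incidents would compromise their internal investigations and incident attribution, undermine their efforts to defend their current systems, and, of course, jeopardize their jobs.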

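Similarly, former and current Huawei and ZTE employees described vulnerabilities in the companies' equipment, as well as unethical or illegal conduct by Huawei officials. These former and current employees would not go on the record for fear of punishment or retaliation by the companies.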

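Moreover, because attributing a cyberattack inside the United States to an individual or to an organization is itself technically very difficult, it is hard for investigators to determine whether an attack was carried out by Chinese industry, the Chinese government, or China's hacker community acting on its own.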


Weibo's Commercialization Potential: 2012 Sina Weibo User Development Survey Report

Author: Chen Huailin (陈怀临) | 2012-10-27 14:18 | Category: Internet

The Last BeiDou Launch of 2012: A Long March 3C Sends the 16th BeiDou Satellite into Orbit

Author: Gao Fei (高飞) | 2012-10-25 16:46 | Category: Industry News

Series: Global Satellite Positioning Systems

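Somewhat unexpectedly, this is a geostationary satellite: five geostationary satellites had already been launched, which should be enough under BeiDou's technical plan. Perhaps one of the geostationary satellites in orbit needs a backup, so another one was launched this time. The BeiDou navigation satellite and the Long March 3C (长三丙) launch vehicle used in this launch were developed by the China Academy of Space Technology and the China Academy of Launch Vehicle Technology, respectively, both part of the China Aerospace Science and Technology Corporation. This was the 170th launch of the Long March family of launch vehicles.

With this launch, the sixteen BeiDou-2 satellites launched so far are: six geostationary (GEO) satellites, in launch order COMPASS-G2, COMPASS-G1, COMPASS-G3, COMPASS-G4, COMPASS-G5, COMPASS-G6; five medium Earth orbit (MEO) satellites, COMPASS-MEO1, COMPASS-MEO2, COMPASS-MEO3, COMPASS-MEO4, COMPASS-MEO5; and five inclined geosynchronous orbit (IGSO) satellites, COMPASS-IGS1, COMPASS-IGS2, COMPASS-IGS3, COMPASS-IGS4, COMPASS-IGS5. Five MEO satellites may be enough to cover the Asia-Pacific region; if coverage is to be gradually extended to the whole globe from next year on, more MEO satellites will need to be launched.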

TransOS: A Cloud Operating System Based on Transparent Computing

Author: interlaken | 2012-10-24 11:23 | Category: Industry News


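While the imported “cloud computing” craze was still running hot, the latest issue of the International Journal of Cloud Computing, published in October, presented TransOS, a new cloud operating system developed by Chinese scientists, in a special issue of more than one hundred pages. It took the IT industry by surprise and drew wide attention from the international science press. Download link for the articles: http://pan.baidu.com/share/link?shareid=89396&uk=3389271398

In the paper “TransOS: A Cloud Operating System Based on Transparent Computing,” Zhang Yaoxue (张尧学), academician of the Chinese Academy of Engineering and president of Central South University, presented TransOS, a new-generation networked operating system, to the international community for the first time. TransOS stores all of the “code”, including the traditional operating system, applications, and data, on a server (the cloud), and lets multiple “bare machines” that carry only a small amount of code connect to it; users load only the code they need, dynamically, in order to run it. In the other articles of the special issue, researchers from Tsinghua University, Intel, Japan, and Canada discussed the operating system in detail from the perspectives of data management, implementation case studies, applications on mobile and embedded devices, and privacy protection models.

TransOS is built on the concept of “transparent computing”, first proposed by Zhang Yaoxue in 2004. Its core idea is to separate storage from computation and software from hardware (terminals), and, through cached “streaming” execution, to turn computing back into a personalized service that is “unobtrusive and user-controllable”. In this model, the operating system is “stripped” from the terminal and treated as a network resource.

This shift brings a number of changes that make TransOS a true “operating system that manages operating systems”. Not only does it use fewer resources and offer higher reliability, it also supports cross-platform, cross-device operation, which Google's Chrome and similar cloud operating systems lack: it can run on personal computers, servers, smartphones, tablets, and even smart home appliances, and it works with the different platforms developed by Apple, Google, Microsoft, and others, thereby breaking down the monopolies and fragmentation among different “clouds”.

Zhang told reporters that although TransOS makes a “revolutionary improvement” to the classic von Neumann computer architecture, users barely notice this change in the background as long as the network is fast enough.

After the articles were published, well-known international outlets such as ScienceDaily, TechEYE, and TechNewsDaily covered them under headlines such as “Operating system in the cloud: TransOS could replace conventional desktop operating systems,” “Chinese want to put the computer's brain in the cloud,” and “Researchers push the operating system into the cloud.”

Zhang remained cautiously optimistic about the prospects for TransOS. He told reporters that TransOS will not yet threaten existing desktop operating systems, but it will give rise to many new kinds of terminals and create many new application opportunities. He also acknowledged that because TransOS demands more network bandwidth, it will make the need for high-speed Internet access even more pressing.

Zhang Yaoxue: academician of the Chinese Academy of Engineering, professor and doctoral supervisor, and president of Central South University (vice-ministerial rank). He is an expert on the military computer and software technology panel of the PLA General Armament Department, a member of the National Informatization Expert Advisory Committee and of the China Computer Federation's technical committee on pervasive computing, and an editorial board member of domestic and international journals including the International Journal of Wireless and Mobile Computing, the Journal of Autonomic and Trusted Computing, and the Chinese Journal of Electronics.

Abstract: Cloud computing has become a research hotspot, and among its many research problems the cloud operating system has attracted a great deal of attention. So far, however, there is still no answer to what a cloud operating system is or how to develop one. From the perspective of transparent computing, this paper proposes a cloud operating system, TransOS, which stores all of the “code”, including the traditional operating system, applications, and data, on a server (the cloud), and lets multiple “bare machines” carrying only a small amount of code connect to it; users load only the necessary code, dynamically, in order to run it. TransOS manages all resources and provides users with complete services, including the operating system itself. The paper first introduces the concept and properties of transparent computing as background, then presents the design architecture of TransOS, and finally illustrates applications of TransOS with examples.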
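The “cached streaming” idea behind transparent computing (code lives on a server, a thin client pulls only the pieces it actually touches and keeps recently used pieces locally) can be illustrated with a small sketch. This is not TransOS's actual design or protocol, which the papers above describe; it only assumes that the server holds a block-addressed image and that the client keeps recently used blocks in an LRU cache. `StreamingBlockCache`, `fetch`, `server_image`, and the block IDs are hypothetical names chosen for illustration.

```python
from collections import OrderedDict
from typing import Callable, Dict


class StreamingBlockCache:
    """Hypothetical thin-client cache: blocks of an OS/application image live
    on a server, are fetched on demand, and are kept locally in LRU order."""

    def __init__(self, fetch: Callable[[int], bytes], capacity: int) -> None:
        self._fetch = fetch          # stands in for a network request to the server
        self._capacity = capacity    # maximum number of blocks kept on the client
        self._cache: "OrderedDict[int, bytes]" = OrderedDict()
        self.remote_fetches = 0      # number of reads that had to go to the server

    def read(self, block_id: int) -> bytes:
        if block_id in self._cache:
            # Cache hit: mark the block as most recently used and serve it locally.
            self._cache.move_to_end(block_id)
            return self._cache[block_id]
        # Cache miss: stream the block from the server.
        data = self._fetch(block_id)
        self.remote_fetches += 1
        self._cache[block_id] = data
        if len(self._cache) > self._capacity:
            # Evict the least recently used block.
            self._cache.popitem(last=False)
        return data


# Simulated server-side image; only blocks that are actually read get transferred.
server_image: Dict[int, bytes] = {i: f"block-{i}".encode() for i in range(1000)}
client = StreamingBlockCache(fetch=server_image.__getitem__, capacity=3)

for block in [0, 1, 0, 2, 3, 0]:
    client.read(block)

print(client.remote_fetches)  # 4: the second and third reads of block 0 hit the cache
```

Here only 4 of the 6 reads reach the “server”, and none of the other 996 blocks are transferred at all. LRU is an arbitrary stand-in policy; a real system would also have to handle writes, consistency between clients, and network failures, which is where the bandwidth concern Zhang mentions comes in.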
